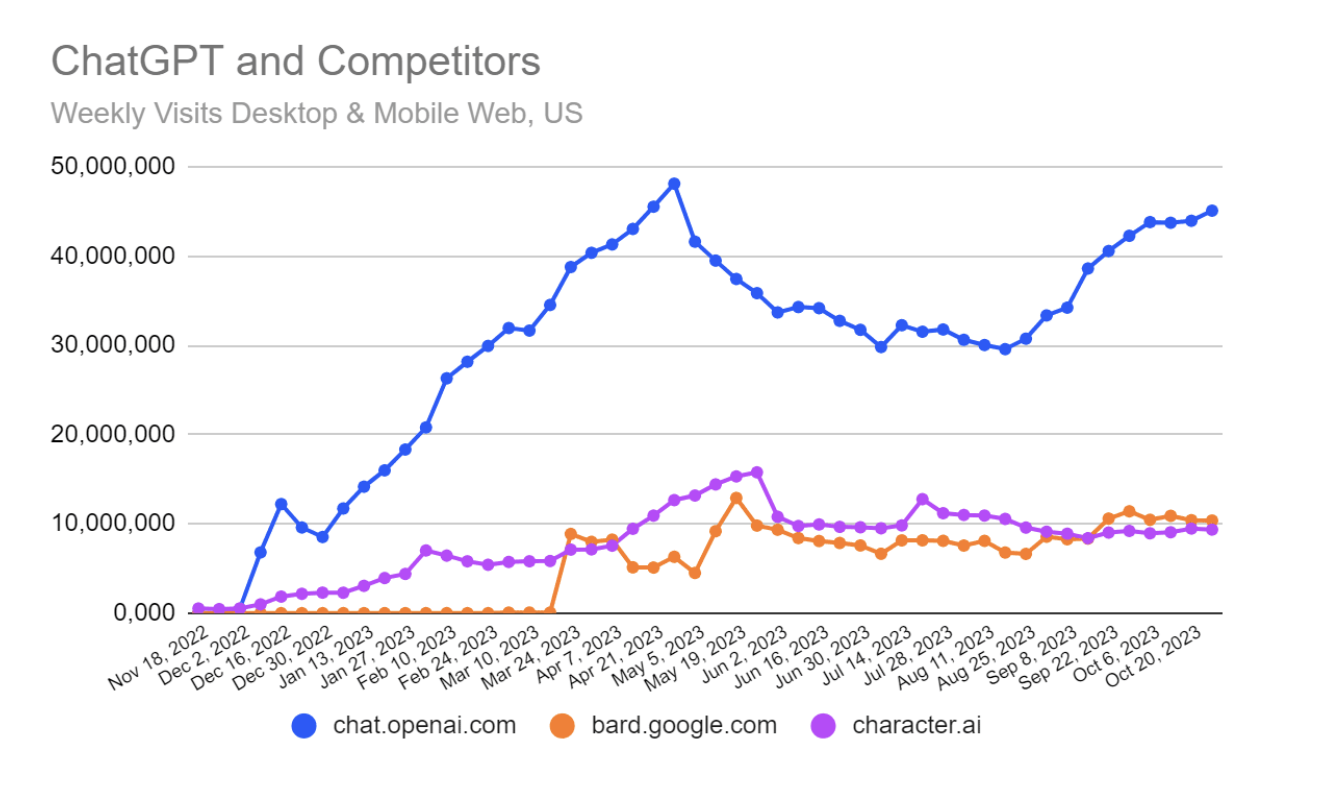

Generative Artificial Intelligence (AI) companies have built their brands as tools of productivity, but their success in the marketplace relies on them becoming addictions.

By addiction, I don’t mean substance abuse and craving, but users’ inability to perform certain functions without the product. I walk around the halls of my university and see every laptop[1] open to ChatGPT tools, ready to type an 800-word class reflection for their users. We currently live in a guinea-pig era of AI models clashing with traditional education methods. Educators, from grade school to graduate-level courses, seem to lack consensus on how to respond to this disruptive technology, but the primary solution I’ve seen is a kind of surrender. Most professors add an AI clause to their syllabus that reads along the lines of “You can use AI tools, just cite them appropriately.”

Traditional education, needing to operate at scale for millions of students, abandoned intimacy and nuance in evaluating individual ability centuries ago. Most education credentials are based on measurable benchmarks, mainly test scores and grade-point averages. The problem is that measuring anything, especially something as abstract as intelligence and cognition, creates an environment for minimal compliance and gaming the system. The COVID era made schools and universities extremely comfortable with replacing flesh-and-blood teaching with online tools such as Canvas and online test-taking.

These two facts set a near-perfect environment for students to use LLMs to cheat the system. Virtual learning eliminated any kind of consistent method to confirm the authenticity of student work, and, knowing this, the average student has every incentive to use new generative AI tools to “assist” their thinking, writing, and validation. As students increasingly defer mental effort to LLMs[2], their cognitive self-reliance erodes until the AI model has gone from a tool to an addiction.

The idea that AI acts as a kind of mental Adderall is not lost on AI companies. In fact, they’re counting on it. In an age of fast-paced, grow-or-die, winner-takes-all global capitalism, it is no longer sufficient for companies to have what were traditionally known as customers. No, they need users and addicts. The growth of professional sports leagues has been blindingly outpaced by that of sports betting companies. Art and entertainment are no longer a safe bet for creating profits, but “influencer marketing” through algorithmically curated short-form video apps is. AI companies can’t survive by acting like a search engine; they have to supplant and replace the mental muscles of users.

This is accomplished by shoehorning as much AI schlock as possible into every corner of existing applications and websites. Adobe Photoshop has begun to ask users, unprompted, whether they’d rather generate an image or graphic automatically with AI than use the actual program tools. Upon detecting that a user has received more than a few texts, WhatsApp asks the user if they’d like the messages to be “summarized” with AI.[3]

This brings us back to the field of education. If I were the CEO of OpenAI, I’d do everything in my power to ensure that my target demographic is students and young professionals in the formative years of learning their craft. Do not, under any circumstances, allow them to develop critical thinking or problem-solving skills on their own. If you can supplant their education with your crutch at the time they’re expected to learn these methods on their own, you will have them as consumers for life. This is precisely what has happened. I’ve seen many advertisements for AI tools on my own college campus. OpenAI ran a generous promotion to provide ChatGPT Plus for free to all college students in May of 2025, conveniently around finals week for most institutions.

Educators do not understand the harm they do by approving student use of AI as long as it’s “properly cited.” Citation assumes it’s being used as a tool or reference, but these companies have no intention of acting as a hammer. To succeed in the marketplace, they need to be a crutch.

The only upside in this new landscape is that those who can resist the temptation to use these tools over their four to six years of university education will have unique advantages over their weaker-willed peers. Being able to write a document without the use of that dash not featured on any known keyboard will make them shine like a beacon to the few who will continue to value the human quality of rough-around-the-edges authenticity.

1. Why do people buy a MacBook Pro 14” with an M4 chip, 24GB of memory, and a 512GB SSD, a computer meant to land people on the moon, to scroll on YouTube and maybe fill in their Google Calendar?

2. LLMs which, by the way, are programmed to adapt to and validate everything said by the user, creating the new problem of parasocial relationships between AI and its users.

3. Imagine pouring your heart out to someone and they decide they just need the Cliff’s Notes.

Tony

An Instagram Account I Dearly Miss
